A Short Recap of a Long History

Advancements in augmented reality have generated growing excitement over the past few years, and demand for creative and innovative approaches that deploy this new technology is on the rise. The AR market has witnessed several seemingly magical AR implementations. And, while the rate of growth in the segment is certainly noteworthy, so too is the fact that we’re really just at the beginning of our AR journey. There is so much exciting innovation yet to come.

Enrich your real world with digital flavour!

Most contemporary AR experiences are entertaining and fun – designed to add a new twist on reality for consumers. But, opportunities for creativity in this space are endless. To better understand where our experience of AR is heading, first let’s have a look at where we’ve been.

Released in 2016, Pokemon Go is a milestone in AR. As its popularity grew – outside of the usual research and tech-focused circles – it began to seem as though just about everyone was chasing virtual characters through the real world, creating an almost “surreal” experience.

While Pokemon Go certainly accelerated the wider conversation around AR, it was, by no means, the beginning of the story.

With Google’s Project Tango – an AR-focused device released in 2014 – we got an initial taste of more advanced AR experiences: experiences that incorporate a sense of physical space in the digital realm by using more accurate spatial tracking.

Prior to Project Tango, Google released Google Glass in 2013. It was Google’s first AR-like device and the first consumer-focused AR headset, preceding Microsoft’s HoloLens by three years. Fairly controversial at the time, Google Glass sparked important conversations around privacy, hidden or concealed cameras, machine learning, and AR.

So it all started with those glasses, then?

Nope! AR has been around for a while. Beginning in 1998, American football fans could see the first-down line on the field during television broadcasts, and fans of Rest-of-the-World football could similarly see the offside line seemingly projected onto the field. Building on the concept behind creator Sportvision’s widely despised FoxTrax feature, these overlays showed that adding computerised images to live sports could introduce a functional new dimension to reality.

Enhance human performance!

Predating our experience of AR in sports broadcasts, the first immersive augmented reality system dates back to 1992. Virtual Fixtures, a telepresence system, was developed by Louis B. Rosenberg at the U.S. Air Force Armstrong Labs. Rosenberg’s goal was to prove that, working together, AR and robots could enhance human performance. Rosenberg demonstrated this using the Pegboard Task, an exercise based on Fitts’s Law, which states that the smaller a target is, the longer it takes a user to reach it.
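To make Fitts’s Law a little more concrete, here is a minimal Python sketch of its commonly used Shannon formulation, MT = a + b * log2(D / W + 1), where D is the distance to the target and W is its width. The function name and the constants `a` and `b` are illustrative assumptions (in practice they are fitted from measurements), and nothing here is taken from Rosenberg’s actual experiment.

```python
import math


def fitts_movement_time(distance: float, width: float,
                        a: float = 0.1, b: float = 0.15) -> float:
    """Predicted time (seconds) to reach a target of a given width.

    Shannon formulation of Fitts's Law: MT = a + b * log2(D / W + 1).
    The defaults for a and b are made-up placeholders, not measured values.
    """
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    index_of_difficulty = math.log2(distance / width + 1)  # in bits
    return a + b * index_of_difficulty


# Same distance, shrinking target: the predicted time goes up.
for target_width in (40.0, 20.0, 10.0, 5.0):
    print(target_width, round(fitts_movement_time(100.0, target_width), 3))
```

With the distance held constant, halving the target width always increases the predicted time, which is exactly the effect the Pegboard Task relies on.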

One of the most interesting aspects of Rosenberg’s experiment is the AR headset he built. In essence, it brings the user’s point-of-view (POV) much closer to the remote robotic arms. A user controls the robotic arms by remote control. However, because they are wearing the AR headset, they have the sense that the robotic arms are direct extensions of their own arms, even though they are located in a remote environment.

Before the first AR system, a couple of multi-sensory systems, such as Morton Heilig’s Sensorama, served as predecessors of the VR and AR we know today.

Wait, what about ghosts?

The illusory roots of AR go even deeper. In 1862, British scientist and inventor John Henry Pepper presented an illusion during a Christmas Eve performance of Charles Dickens’ The Haunted Man. The effect became known as “Pepper’s Ghost” and the same basic technique is still used today for creating AR experiences such as Tupac’s appearance onstage at the 2012 Coachella Festival, for script teleprompters and as a base for AR headsets.

Understanding Reality vs. Reality vs. Reality

Realities, Realities Everywhere!

Along with new technologies come new vocabularies, and sorting out which “reality” we’re discussing can get confusing. We’ve got virtual, augmented, mixed, immersive and other realities to contend with. So, let’s break them down, as summarized by Magic Leap.

Virtual Reality

Virtual reality provides full simulation of the user’s environment. It replaces the user’s whole world view with objects that don’t physically exist. Everything the user sees is digital, i.e. virtual.

Augmented Reality

Augmented reality combines a real-world environment with any sort of digital objects, overlays, user interfaces, or effects. Users sense both physical and digital elements in their field of view.

Mixed Reality

Mixed reality is an extension of AR. The digital objects in the user’s field of view are placed into the real world seamlessly, as if they belong there. It requires the most advanced surface mapping algorithms to create the perfect illusion for users.

All the above can be grouped together and called extended reality (XR) or immersive reality.

In the future we can expect even more nuanced definitions. For instance, former Intel CEO Brian Krzanich has introduced the concept of merged reality – combining interactions between digital and physical objects – as another new avenue for ongoing research and development. Invisible Highway, developed by Jam3 in collaboration with Google, is a good example that combines AR with a physical robot.

Hopefully this short overview has made these multiple realities clearer. Now, how do we actually experience contemporary augmented reality?

How We Experience AR

AR headsets

The latest addition to the AR headset family is the second edition of Microsoft’s HoloLens, introduced in February 2019 and currently available for preorder. Judging by the technical specifications, HoloLens 2 will certainly be the most advanced AR headset to date and I can’t wait to get my hands on it.

In 2018, after years of prototyping, Magic Leap released their AR headset, and you can order this sleek, futuristic-looking device on their website.

Leap Motion also released their Project North Star in 2018. Interestingly, it’s open source, so you can try to build your own AR headset if you’re feeling crafty.

While Microsoft’s HoloLens (2016) has been followed by a second generation, HoloLens 2 (2019), Google discontinued Google Glass (2013) in 2015.

In the near future we will probably see more and more compact AR headsets. More major advancements are likely five or more years away, though. Facebook has confirmed that they’re working on AR glasses and Apple, as usual, is rumored to be making their own. So, there will surely be some exciting developments over the next few years.

A little further into the future, we might also see more advanced concepts such as a liquid crystal-based contact lens display, which could lead to the first AR lenses.

Screen Devices

Much more readily available for consumers are screen devices. Every modern mobile phone can be turned into an AR device by opening an application or a website that includes an AR experience.

For installations, LCD or LED walls, combined with a camera feed, can provide an interactive AR experience on a larger scale. One of the bigger areas of opportunity I currently see is with transparent multi-touch LCD screens that could enable new and exciting AR experiences. And, in a sort of separate category, we’ve also seen the development of holograms using a projection pyramid. That’s another modern AR experience based on the good old “Pepper’s Ghost” effect!

Worldview vs. Camera Feed

There’s an important distinction to make between more enclosed AR experiences that use a camera feed POV to frame the experience, and those that incorporate AR into a more open worldview. While using a mobile device or LCD/LED screen, the user consumes both digital and real world elements mediated through the screen. The entire experience occurs within the enclosed frame of the screen. However, by wearing a headset or using transparent LCD or holograms, users sense real world elements as usual, within their field-of-view. In addition, they sense virtual content literally augmenting this same POV, which makes this much closer to a true AR experience.

Bringing AR Experiences to Life

Developing AR Experiences

Creating AR experiences for AR headsets usually means building an app using a game engine such as Unity or Unreal, together with a supported plugin released by manufacturers, or using the headset’s native SDK.

For mobile applications, both Google and Apple released their AR SDKs – ARCore for Android and ARKit for iOS – and both include support for game engines. For some more advanced features it’s worth considering Vuforia and 6D.ai, and looking at other AR Cloud startups such as Blue Vision (recently bought by Lyft) or Fantasmo.

Social media platforms provide a way to deploy AR experiences to their mobile applications. The experiences are created in the social-media-specific development environment: for example, Spark AR Studio for Facebook and Instagram effects, and Lens Studio for Snapchat.

For web applications there is WebAR: a website built on HTML, CSS, JavaScript and WebGL, together with AR libraries such as AR.js, 8thWall, or TensorFlow.js.

Native AR ≠ WebAR

WebAR took a big step forward in 2018. Previously, WebAR experiences could use face recognition or image recognition, but without surface tracking. To prototype WebAR with plane detection we had to lean on an experimental Chrome version with native ARCore or ARKit support, which was not suitable for production projects. Late in 2018, though, 8thWall brought the key “plane detection” feature into modern web browsers for both Android and iOS. For image recognition, we had a very exciting release of the TensorFlow.js library earlier in 2018, which runs directly in the web browser.

Plane detection in the web browser based on Javascript! Just wow! Very exciting. Check @the8thwall and try it yourself: https://t.co/u0CvmFMSp6 #AR #AugmentedReality #WebAR pic.twitter.com/HQXwgkRQH2

— Peter Altamirano (@peteraltamiran0) September 13, 2018

For marker-based WebAR, 8thWall released the long-awaited Image Targets feature in April 2019. Prior to this release, you had to use specific markers with a border rather than an arbitrary image. With the latest Image Targets feature, however, you can detect and track multiple images in the web browser. Together, these updates create many more WebAR opportunities.

Overall, when considering WebAR experiences, we need to account for limited performance, limit the user’s camera movement, and aim for low-polygon experiences. On the other hand, WebAR maintains its biggest advantage in not requiring downloads and remaining easily shareable.

General AR Watchouts

While AR is getting more advanced and precise, there are still a couple of limitations to keep in mind and good practices to follow to ensure an ideal environment for the best accuracy:

- Lighting conditions with limited reflections, considering materials and viewing angles;

- Visible marker in the camera view and quality of the marker;

- No dynamic lighting, so shadows are fixed rather than adapting to the real environment’s lighting conditions;

- Lower performance in WebAR than in native AR.

Types of AR Experiences

World Tracking

Marker-based AR experiences are created by detecting and tracking a marker/logo in the 3D space. Digital content is then placed in relation to the detected marker. These are widely available for Native AR, WebAR, Lens Studio and Spark AR applications.
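As a rough illustration of what “placed in relation to the detected marker” means, the sketch below composes a marker pose with a local offset using 4x4 homogeneous transforms. It is a minimal Python sketch and not the API of any AR SDK: the helper names, the pose values, and the convention that the camera looks down the negative z axis are all assumptions made for the example.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]  # 4x4 homogeneous transform, row-major


def translation(x: float, y: float, z: float) -> Matrix:
    """Transform that moves a point by (x, y, z)."""
    return [
        [1.0, 0.0, 0.0, x],
        [0.0, 1.0, 0.0, y],
        [0.0, 0.0, 1.0, z],
        [0.0, 0.0, 0.0, 1.0],
    ]


def rotation_y(angle_rad: float) -> Matrix:
    """Transform that rotates around the vertical (y) axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [
        [c, 0.0, s, 0.0],
        [0.0, 1.0, 0.0, 0.0],
        [-s, 0.0, c, 0.0],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain 4x4 matrix product a * b."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def place_relative_to_marker(marker_pose: Matrix, local_offset: Matrix) -> Matrix:
    """Pose of the content in camera space: marker pose, then the local offset."""
    return matmul(marker_pose, local_offset)


def position_of(pose: Matrix) -> Tuple[float, float, float]:
    """Read the translation part of a pose."""
    return pose[0][3], pose[1][3], pose[2][3]


# Hypothetical tracker output: the marker sits 0.5 m in front of the camera
# (assumed to look down -z) and is turned 90 degrees around the y axis.
marker_pose = matmul(translation(0.0, 0.0, -0.5), rotation_y(math.pi / 2))

# Content authored 10 cm along the marker's own x axis.
content_pose = place_relative_to_marker(marker_pose, translation(0.1, 0.0, 0.0))
print(position_of(content_pose))
```

Because the offset is expressed in the marker’s own coordinate system, the marker’s rotation turns the 10 cm sideways offset into extra depth, so the content ends up roughly 0.6 m in front of the camera. Re-running the composition every frame with the latest tracked pose is what keeps the content “attached” to the marker.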

ARKit and Vuforia incorporate a 3D object tracking feature. It allows an app to detect a physical object from all angles in 3D space and place digital content relative to it. ARCore will soon also feature this capability.

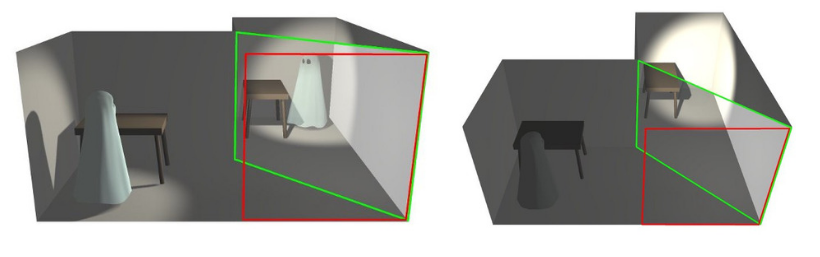

A markerless approach (Simultaneous Localization and Mapping / SLAM) doesn’t require any marker or object since it detects the surface. This allows building AR experiences around a physical environment by accounting for detected surfaces/planes.

The most advanced real-time SLAM experience I’ve had the chance to test is provided by 6D.ai.

Human Body Tracking

Face tracking is widely used and well known since it’s available on most platforms and because it provides remarkable accuracy. Face swapping, makeup, and expression detection are all widely available. These capabilities create near endless opportunities for AR experiences. But while face tracking is pretty advanced, other human body parts remain much harder to track. However, recently I was pleasantly surprised by the impressive accuracy of a hair detection app created by YouCam Makeup and of foot tracking in the Wanna Kicks app.

Hand tracking is possible using Leap Motion’s sensor together with an AR headset or screen, and it creates highly accurate AR experiences. Spark AR provides hand tracking for Facebook and Instagram filters. WebAR has pretty limited options for now, but it is possible to track hands to a certain degree.

Skeleton tracking was, at one time, unique to depth camera sensors such as Kinect. But we can now create pretty solid experiences running skeleton tracking in web browsers, as well. How about becoming a virtual character? Have fun!

Localization-based experiences are those where AR content is positioned relative to the phone’s position in space. Drawing has never been more fun than scribbling 3D drawings in the air using only your phone!

Where Are We Going?

The New Kid On The Block — AR Cloud

AR Cloud brings huge potential into AR, from multiplayer to perfect localization and occlusion capabilities, as well as spatial mapping with very accurate surface detection (improved SLAM). AR Cloud brings us a step closer to mixed reality and the ability to create much more immersive AR experiences.

The Future of AR

Although our experiences still often hark back to “Pepper’s Ghost,” augmented reality has come a long way in the last few years and we’re really still just at the beginning of the journey. The coming months and years promise exponential growth, most prominently in software, machine learning, and especially in image and surface recognition. Together with new devices, improved computing power, and better cameras, we are driving AR progress forwards faster than ever and creating exciting new experiences for consumers.

No less impressive are the hardware upgrades also in development. The predictions for VR and AR by Oculus’ Chief Scientist, Michael Abrash, are well worth watching. Fascinating progress is also being made using audio instead of images for AR experiences, in particular with the latest AR Audio Sunglasses from Bose.

The next few years will be extremely exciting as we witness AR advances in retail (redefining the user shopping experience), entertainment, and transport, as well as groundbreaking and lifesaving developments in education and medical applications.

So that’s a little taste of where we are and some of where we’ve been. What about augmented reality excites you?

Guest Post

About the Guest Author(s)

Peter Altamirano

Peter Altamirano is Technical Director at Jam3. He guides tech projects and teams, from frontend and backend to AR and installations. He loves exploring new technologies, bringing new ideas, prototyping, improving production processes and development. He is a creative technologist discovering new possibilities and making things happen. He has previously worked with brands like Google, Facebook, Adidas, eBay, Disney, and IBM.