Long before the first concepts of AR and VR appeared, futurologists predicted, and filmmakers showed on screen, that mankind would abandon third-party apps and devices alien to human physiology. Why invent another joystick, gamepad or controller when millions of years of evolution have already given us the most perfect controller for interacting with the environment: our hands?

Current State

After the significant breakthroughs in AR and VR technology of recent years, and the arrival of a relatively wide line of products available to the mass consumer, pent-up demand began to form for interfaces more user-friendly than traditional controllers.

It is quite obvious that time is against existing solutions: rapid AR/VR market expansion is restrained only by the inconvenience of interacting with the virtual environment and by the still high degree of “exoticism” of these technologies. That, though, is only a matter of time, as more and more products enter the mass market each day.

On this basis, so-called “natural methods of control” emerged and gained widespread popularity as the Zero UI (or NoUI) concept. At the heart of this concept is the idea that voice, gestures, and facial expressions are the most convenient and natural interfaces, providing the most effective and relevant connection between a person and a computer system.

Interface Design Paradox

Some people might have heard the old UI/UX designers’ joke: “The best interface is no interface.” Our love for digital interfaces and controllers got out of control long ago, as an interface has become the answer to every design problem. How to enhance a car? Slap an interface on it and design remote control via mobile phone, so that you can give your girlfriend a lift without even leaving home. How to sell a new model of refrigerator? Connect it to Wi-Fi and put the Facebook app on its door. Take a salad instead of a burger and immediately share it with friends – handy, isn’t it? Build a webcam into a vanity dresser and add a remote control to take a snap and instantly post it on Instagram. Sounds funny, yeah? Joking aside, these are concepts and products that already exist.

In the pursuit of interactivity, UI/UX design stopped carrying out its main task: making the user’s life easier. Interaction with many modern interfaces has ceased to be simple, exciting and user-friendly. An interface, as an intermediary between user and product, should simplify the process and reduce the number of actions, not increase it. In theory, when interacting with a computer system, the user should have to carry out fewer actions (or at most the same number) than they would have to perform by hand.

Forward-Looking Solutions?

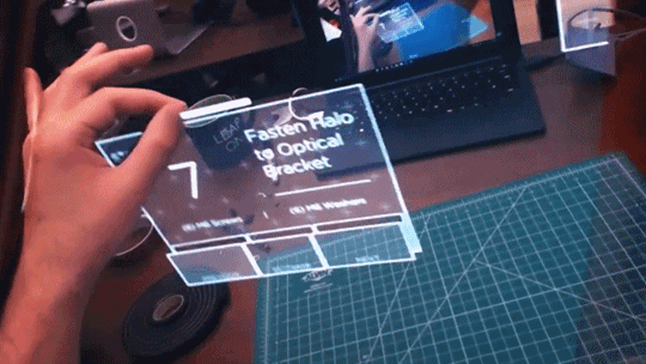

The real situation is far from the numerous promotional pictures in which people wear VR glasses and manipulate objects with their hands. There is a big difference between VR helmets (or AR glasses) that come with controllers and ones that can be controlled by hand gestures.

In the case of voice, significant success has been achieved (think of voice control software, voice dialers and the numerous voice assistants based on voice recognition, with which we will soon be able to communicate as with real people), while the recognition of facial expressions and gestures is quite a different story. For now, recognition of facial expressions is implemented mainly in the entertainment segment, in the form of “masquerade masks” or animated emojis. The same goes for gesture control: it is used almost exclusively in the video game industry. And that’s it. Yes, there is Microsoft HoloLens, which allows its users to click and open the menu using a hand, but what is far more anticipated is the integration of a solution such as Leap Motion or ManoMotion (or any other) into mass-produced products. All of them, however, are still far from a complete kinesics-based user interface.

Importance for the Future

Obviously, there are certain difficulties on this path that keep kinesics from becoming a universal language of human-computer interaction within the NoUI/Zero UI concept. For instance, it is necessary to take into consideration that the meaning of a gesture varies with the cultural environment in which it is used.

Nevertheless, differences in the interpretation of gestures are less numerous than the languages spoken on our planet. Furthermore, we can get more information about a person’s desires and intentions through so-called “body language”. It is possible to estimate the user’s mental state, attitude towards the situation, desires expressed nonverbally, or intentions that are consciously suppressed. In the future, all of these can be used in very different spheres.

The transition towards native interfaces and the realization of the NoUI/Zero UI concept will enable us to make a great leap into the future, comparable in importance to the creation of the first visual interface or to mobile devices’ transition from physical keyboards to touch screens. The only open question is who will manage to anticipate the demand and design that interface of the future.

About the Guest Author

Vlad Ostrovsky

Vlad Ostrovsky is a Lead Marketing Manager at SCAND, a custom software development company based in Minsk, Belarus. Vlad has participated in the launch and promotion of several AR/VR startups and products. He is an active follower of the Zero UI/NoUI concept and the author of various research pieces.