Snap, the company behind Snapchat, Spectacles, and more, held their annual Partner Summit online this Thursday, May 20. The event was full of announcements, releases, updates, and launches. Even focusing just on the AR content, there was a lot to unpack.

Expanding AR Capabilities With Lens Studio 4.0

Snap updated Lens Studio earlier this spring so, to be honest, we weren’t really expecting any major software announcements from the Partner Summit. However, Snap used the event to announce Lens Studio 4.0, as well as Ghost, a new AR innovation lab created in partnership with Verizon for research into 5G-enabled experiences.

“Connected Lenses” now allow users to interact with digital objects together through AR. The first of these experiences, created through a partnership between Snap and Lego, went live during Snap’s presentation.

Lens creators also have access to new machine learning capabilities including 3D Body Mesh and Cloth Simulation, as well as reactive audio. In addition to built-in recognition of over 500 categories of objects, Snap gives lens creators the ability to import their own custom machine learning models.

One company using Snap’s ML-enabled reactive audio is Artiphon, makers of the Orba and INSTRUMENT 1 digital instruments. With their new Scan Band lens, users can make music with notes generated by physical objects in their environment.

“We think of AR as going through the screen and back into the world,” Artiphon founder Mike Butera told ARPost. “We want to make music more kinetic. We really want music to be a part of everyday life.”

Expanding AR and E-Commerce

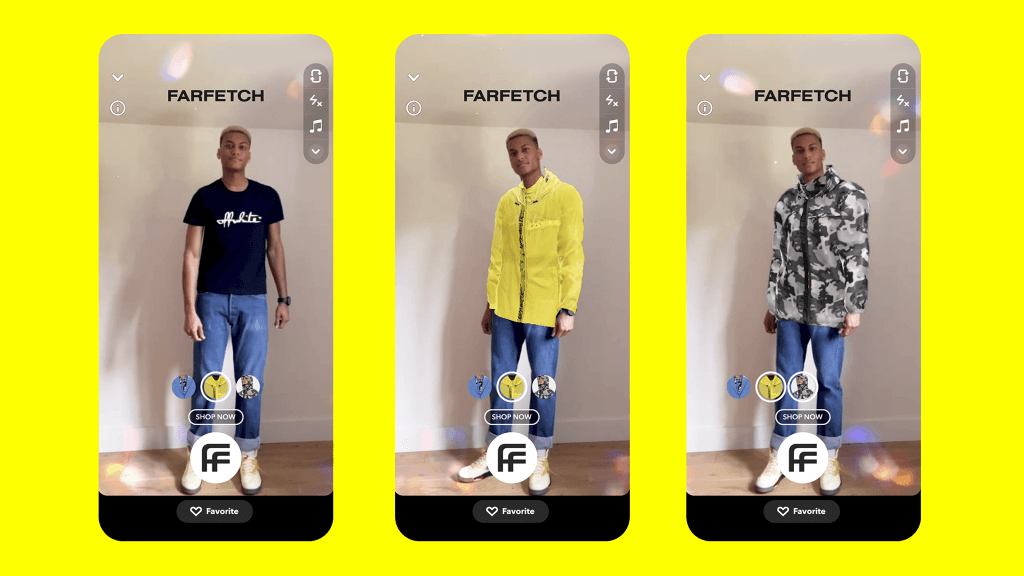

While Scan Band definitely speaks to how these tech advances make their way into engaging consumer applications, Snap also has an eye on e-commerce. Partners like Farfetch, Prada, and MAC Cosmetics are using the company’s new tools for voice and gesture-controlled virtual product try-ons, social shopping experiences in AR, and more.

Snap even announced a new Creator Marketplace to connect businesses running e-commerce on the platform with lens creators. This means new opportunities for lens creators, new outreach opportunities for businesses, and more fun and informational AR experiences for online shoppers/Snapchat users.

That slash between online shoppers and Snapchat users may become blurrier and blurrier in the months to come. The company also announced that Snapchat users would be able to purchase content from vendors on-platform. Snap Camera’s head of product marketing, Carolina Arguelles Navas, went so far as to call Snap “a new point-of-sale.”

Making AR Tools and Experiences More Visible

Snap isn’t just increasing the power of their AR tools; they’re also putting those tools front-and-center in the platform.

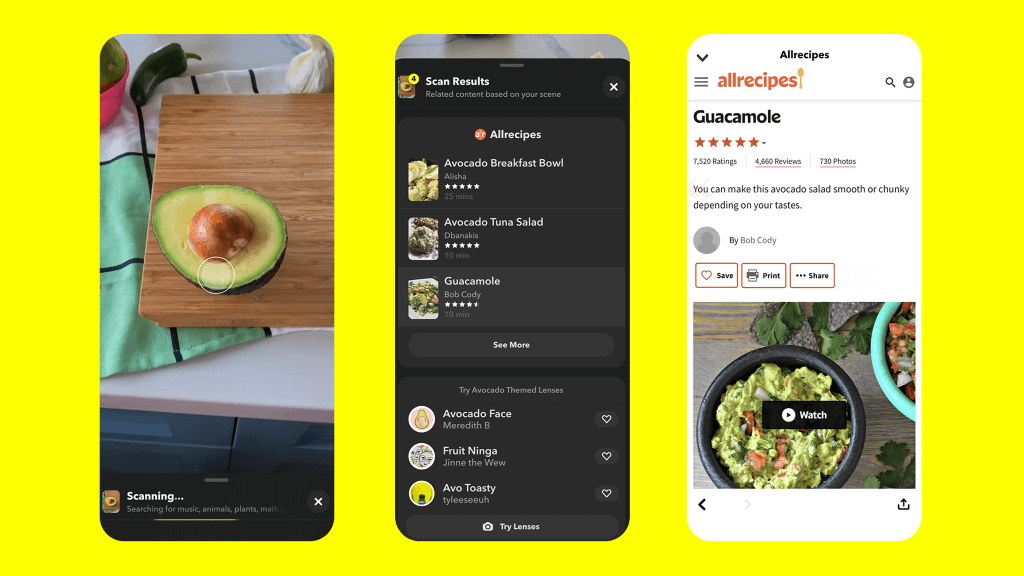

- The scan feature, which recommends lenses, audio, and other features to users based on their environment as viewed through the Snap camera, is moving to the camera’s main screen.

- A standalone app called Story Studio gives users access to editing tools as well as AR effects and music so that they can publish more refined content.

- Camera shortcuts will make AR lenses and other video and image augmentation content more accessible within the app.

Into the Metaverse?

Some of the day’s announcements bring the humble “camera company” well within the as-yet ill-defined borders of the “metaverse.”

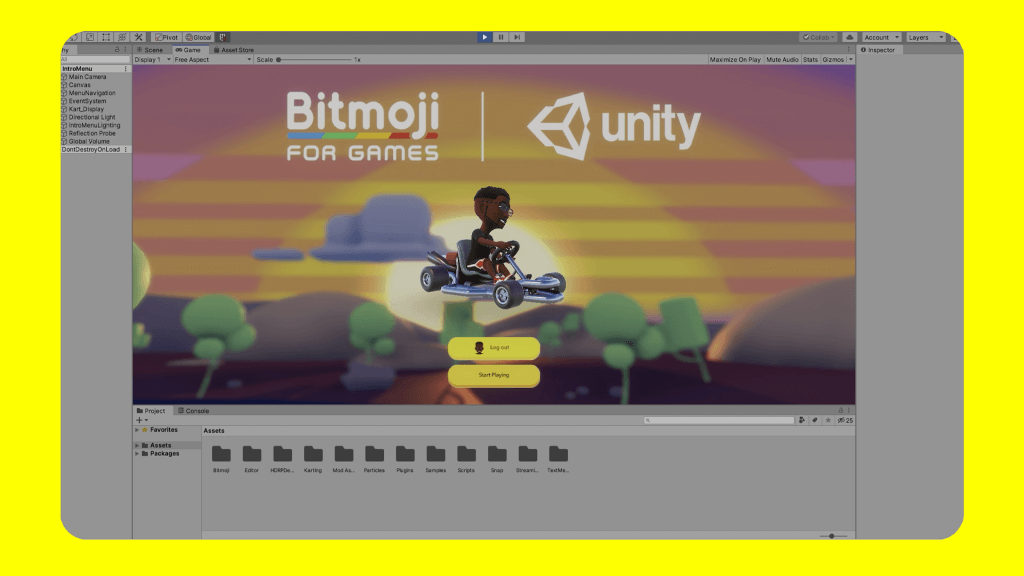

The Bitmoji software that Snapchat users use to represent themselves and play games on the platform is now accessible to game developers using Unity. This will allow users to play as their custom Bitmoji characters in mobile, PC, and even console games made by participating developers.

The Snap Map is being updated with a new layer to help Snapchat users not only find one another but also find information on businesses and events in their community. A “Memories” feature will also allow app users to view posts tied to physical locations by visiting them on the Snap Map.

Finally, Snap’s machine learning tools are increasingly being used to augment physical items with information. An integration with Screenshop will allow users to scan outfits to shop for clothing, and a partnership with AllRecipes will allow users to browse recipes based on ingredients visible through the Snap Camera.

The Surprise Launch of New Spectacles

The big event of the day came at the very end of the Summit, when CEO Evan Spiegel announced the next generation of Spectacles. Spectacles have been around for a while now, but they didn’t include displays, making them essentially a head-mounted camera that wasn’t a serious part of most discussions about AR. That changed with this announcement.

The new Spectacles feature dual-waveguide displays with a 26.3-degree field-of-view and built-in touchpad controls. Dual cameras allow six-degrees-of-freedom hand and surface tracking, and a built-in microphone allows for voice commands. Weighing in at 134g, the glasses have a half-hour operating life.

Naturally, the new Spectacles come fully integrated with Snap’s cameras as well as with Lens Studio. The glasses are currently in the hands of “a select group of global creators.”

Oh, Snap

Some of the big news may have already made its way to Snap’s regular users. Other changes will roll out on the platform and in developer kits over the coming months and take still longer to manifest in the lenses and experiences that the company makes possible.

“We couldn’t be more excited about what we are building together in AR,” said Spiegel. “Our work here is far from over, and in many ways it’s just beginning.”

Indeed, not expecting much from the Partner Summit would have been forgivable. However, between the surprise software announcements and the strategic partnerships that increase the reach and utility of Snap’s services, the announcements made at the Summit may prove a touchstone in AR history.